Reflection on two years of writing evergreen notes: not optimal for skill acquisition and learning

Reference frames, ontologies, systems thinking, and an idea of a "mind gardener" tool

Evergreen notes don't reflect the mental maps of one's mind

I've created more than a thousand evergreen notes in about two years. This has been very useful in my learning and exploration, without a doubt.

However, I don't feel that the practice of taking evergreen notes significantly improved my mental ability to "connect the dots":

After taking an evergreen note, the usual practice is to think about which existing notes are related to the new note, and link them. I often struggle to recall more than a single related note. However, through that first link, I can explore the "link closure" and often find many more related notes.

I regularly create a note and then through related links, I discover that almost exactly the same note already exists. I completely forget about it when I create a new note.

Unfortunately, I don't have statistics of the graph of my notes, but I'd expect the average number of links per note to be about 6 and the median to be about 3. For me personally, this graph is a really valuable body of information and links. I'm often amused to explore it as if it was not me who wrote it.
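If the notes could be exported as a list of links, these statistics would be cheap to compute. The following is a minimal sketch, assuming a hypothetical export of (note, note) pairs; the `links` list is toy data for illustration, not the real graph, and `link_counts` is an illustrative helper name:

```python
from collections import Counter
from statistics import mean, median

# Hypothetical export: each pair is an undirected link between two notes.
links = [
    ("Lithium plating", "Cell capacity fade"),
    ("Lithium plating", "SEI layer on anode"),
    ("Lithium plating", "Cell degradation"),
    ("Cell capacity fade", "Cell degradation"),
    ("Evergreen notes", "Most people take only transient notes"),
]

def link_counts(pairs):
    """Count how many links touch each note (its degree in the graph)."""
    degree = Counter()
    for a, b in pairs:
        degree[a] += 1
        degree[b] += 1
    return degree

degree = link_counts(links)
print("mean links per note:", mean(degree.values()))
print("median links per note:", median(degree.values()))
```

A mean well above the median, as guessed above, would indicate a few heavily linked "hub" notes alongside many sparsely linked ones.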

These observations make me think that I create an interesting garden of evergreen notes which is, however, weakly related to the mental maps of concepts, or reference frames as Jeff Hawkins calls them in A Thousand Brains. In other words, evergreen notes don't fulfil the role of gardening and shaping my mental maps, and thus don't improve my "bare" thinking (thinking that I can do without consulting my web of evergreen notes).

Evergreen notes and Zettelkasten are good for "frontier thinking", but not for learning a practice or a domain

The fact that evergreen notes don't reflect the mental maps of one's mind (Zettelkasten notes also don't, for that matter) is at least partially by design. Andy Matuschak writes that "notes should surprise you":

If reading and writing notes doesn’t lead to surprises, what’s the point? If we just wanted to remember things, we have spaced repetition for that. If we just wanted to understand a particular idea thoroughly in some local context, we wouldn’t bother maintaining a system of notes over time.

Quoting the page from the official Zettelkasten website:

If you look something up in your Zettelkasten, you need to get unexpected results in order to form new thoughts. Surprise is the key ingredient here, as I pointed out in my introductory post on this topic. The links between notes make this possible since you’ll generate new ideas by following connections and exploring a part of your web of notes. The non-apparent connections are generally more beneficial to creative thinking than the obvious ones as they generate greater surprise. While your mind usually continues to work with the obvious, your Zettelkasten instead shows you the bizarre. It sparks your imagination and blows your mind as it confronts you with the unexpected.

It's important to note here that both Andy Matuschak and Niklas Luhmann use their note-taking systems for doing original research, whereas at least 80% of my notes are unoriginal and reflect ideas and concepts I learn from books and elsewhere. I use the evergreen note system for learning a practice or a domain and as my external memory. My main goals are improving and increasing my memory capacity, and making thinking and recalling things faster and more reliable (not forgetting things I shouldn't forget about).

Therefore, it seems to me that while evergreen notes and Zettelkasten are good systems for doing original research or "frontier thinking", these note-taking systems are not optimal for training one's mind in fluent and reliable thinking in terms of a certain (professional) practice (for example, deliberating about security threats when considering certain IT solutions: a practice of security engineering) or learning the existing body of knowledge in a certain domain (for example, quantum computing).

The current state-of-the-art idea for a tool that helps one to learn a new practice or a domain is a mnemonic medium, a text which embeds a spaced repetition memory system.

Below in this post, I propose an idea of a new tool for thought, a development of the idea of a spaced repetition system that leverages the preliminary insights about the topology of human memory and the nature of thinking from Jeff Hawkins's “the thousand brains” theory.

The actual structure of my evergreen notes: it's a mess

I use Notion for my evergreen notes, so I cannot explore the graph of notes visually (Notion doesn't support this feature yet), but I think that the graph resembles a fairly random one (no overall structure) with very uneven density. There are many cliques of 5-10 notes that are almost fully interconnected. There are larger clusters, comprising several to a dozen such cliques. And there is also a big portion of notes that have only one or two links. On the other hand, there are many notes that have more than ten links.

This is a rough illustration of how the actual web of my evergreen notes should look (I think):

Andy Matuschak writes that evergreen notes should be concept-oriented. However, in practice, I see that both my and Matuschak's notes gravitate towards the following four types of notes (examples are from my notes and from Matuschak's):

Concept-oriented notes: Loss of lithium inventory, Evergreen notes.

"Statement" notes: Electrode voltage curves are steeper when the electrode has little lithium, Most people take only transient notes.

Imperative notes: BMS should use the remaining capacity estimate to narrow down the battery's charge and discharge voltage limits, Evergreen notes should be densely linked.

List/"outline" notes: Positive feedback loops of cell degradation, Similarities and differences between evergreen note-writing and Zettelkasten.

See Matuschak's Taxonomy of note types.

My evergreen notes (and, evidently, Matuschak's) are not ontologically structured: a typical heavily linked note, e. g. Lithium plating (currently 15 links), has links with all sorts of ontological relationships to the concept note:

Lithium plating → Cell capacity fade: caused-by

Lithium plating → Cell degradation: mechanism-of (transitively via Cell capacity fade)

Lithium plating → SEI layer on anode: appears/happens-beneath (spatial relationship)

Lithium plating → Anode overhang decreases the risk of Lithium plating: no relationship because the linked note is not concept-oriented. If there were a concept-oriented note "Anode overhang" (there isn't one), the relationship would be risk-reduced-by. If there were additionally a separate page "Risk of Lithium plating", the relationship would be simply reduced-by.
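One way to make such relationship types explicit is to store each link as a (source, relation, target) triple instead of an untyped link. This is a minimal sketch: the triple layout and the `group_by_relation` helper are assumptions for illustration (Notion offers nothing like this out of the box), and the relation names are the ones from the list above:

```python
from collections import defaultdict

# Each link carries an explicit relation type, taken from the list above.
typed_links = [
    ("Lithium plating", "caused-by", "Cell capacity fade"),
    ("Lithium plating", "mechanism-of", "Cell degradation"),
    ("Lithium plating", "happens-beneath", "SEI layer on anode"),
]

def group_by_relation(triples, source):
    """Split one note's links into groups that share a relation type."""
    groups = defaultdict(list)
    for src, relation, target in triples:
        if src == source:
            groups[relation].append(target)
    return dict(groups)

frames = group_by_relation(typed_links, "Lithium plating")
for relation, targets in frames.items():
    print(f"{relation}: {targets}")
```

Grouped this way, the three links of this one note fall into three separate groups, which illustrates how little ontological consistency a single heavily linked note has.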

Mental reference frames of concepts have at most seven "features"?

Jeff Hawkins writes in A Thousand Brains:

Each cortical column can learn models (maps) of complete objects.

Objects are defined by sets of observed features in the upper layer of a cortical column associated with a set of locations of/on the object (lower layer of neurons). If you know the feature, you can determine a location. If you know the location, you can predict the feature.

"Features" are actually links to other reference frames, so it's reference frames all the way down. The neocortex uses hierarchy to assemble objects into more complex objects.

Each cortical column can learn hundreds of models.

Reference frames are used to model everything we know, not just physical objects. Thinking is moving between adjacent locations in a reference frame. All knowledge is stored at locations in reference frames.

Attention plays an essential role in how the brain learns models. All objects in attention are constantly added to models, either temporary or not.

Note that this last quote implies (I think Hawkins also points this out more explicitly at some point) that "current working memory" is a temporary reference frame.

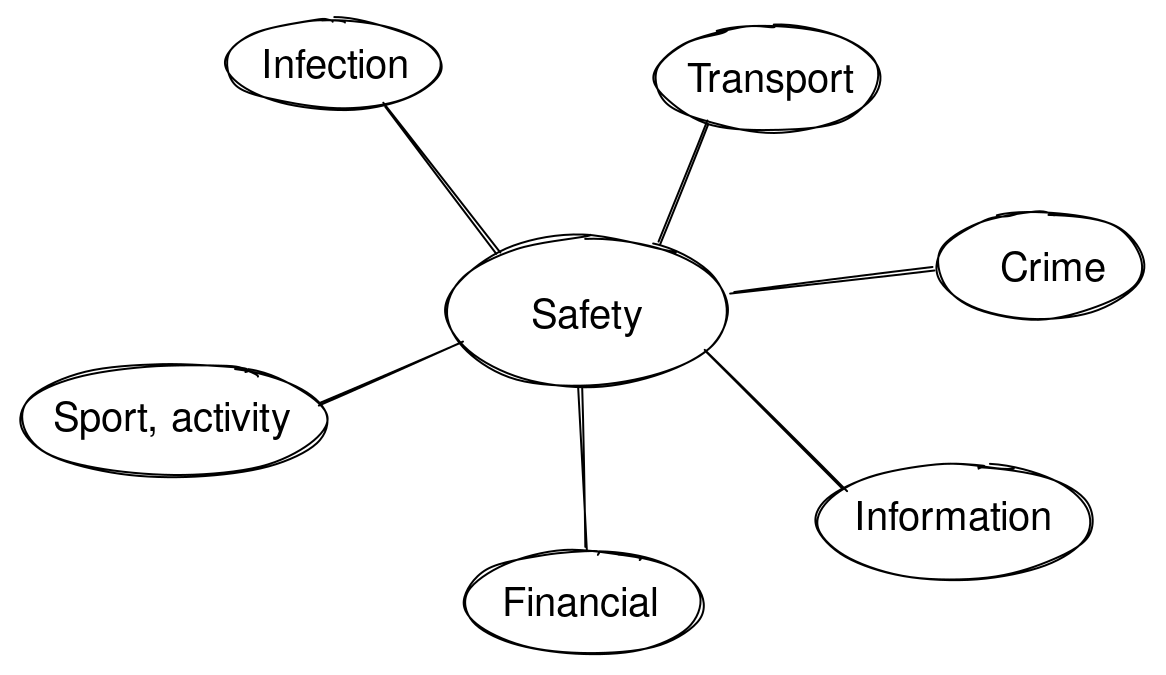

Hawkins's ideas together with the idea that people can only hold about seven objects in working memory lead me to a speculative conclusion that reference frames of abstract objects (concepts) should look like stars of at most seven points. Some examples:

In my mental model/mental map/reference frame of "aspects/types of safety", there are no "animals". If I lived in the Amazonian jungle, this map would probably include "animals", "insects", "river", "food", and "neighbouring tribe", i. e. would be completely different.

"Rocket" or "hyperloop" are not featured on my reference frame of the means of transport, but probably are present on the equivalent reference frame in Elon Musk's mind.

I feel that reference frames with more than seven concepts don't exist in my mind. For example, "software quality" is not a reference frame in my mind: I cannot navigate it, and I cannot easily extract its features from my memory, even though I once spent several weeks thinking almost exclusively about this topic and documented it on Wikiversity. To talk and think about software quality, I need to refer to some notes, wiki pages, and diagrams constantly.

Of course, the knowledge about different software qualities is stored somehow in some reference frames in my mind because if I think long and hard, I can extract, perhaps, 15-20 qualities from my memory. But there is probably some implicit second hierarchy of concepts between the concept "software quality" and the actual qualities, or, more likely, some mess of "proto-", "fuzzy" frames. Mental navigation in such a landscape is energetically hard, it is "real thinking". The mental navigation in crisper, simpler frames of at most seven features is effortless, "automatic".

The first implication of this conclusion is that overly connected evergreen notes don't reflect actual reference frames in my mind and therefore don't serve the mind in "mental navigation", recall, solution finding, etc.

The second implication is a new way to look at the famous "two-pizza team" rule. Traditionally, the team size of 8 is justified by the growing number of communication links between team members. But maybe people cannot place more than 7 colleagues well on their "team" reference frame?
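The traditional argument can be made concrete: if every pair of team members counts as one potential communication link, a team of n people has n(n-1)/2 links. A quick sketch of that arithmetic follows; the pairwise model is the usual assumption behind the rule, not something measured here, and `channels` is an illustrative name:

```python
def channels(team_size: int) -> int:
    """Number of distinct pairs in a team of the given size."""
    return team_size * (team_size - 1) // 2

for n in (4, 7, 8, 12):
    print(n, "people ->", channels(n), "pairwise links")
```

Under this model the number of links grows quadratically (21 links for 7 people, 28 for 8), with no sharp threshold at any particular size, whereas the reference-frame explanation predicts a limit at around seven colleagues.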

There are also powerful implications related to systems thinking which I cover below in this post.

Hierarchical visual/spatial reference frames are also limited to 7-9 features on a single level of the hierarchy?

I suspect that even visual reference frames may not contain much more than 7 or 9 features. They all seem to be hierarchical. For example, individual countries are not direct features of the "world" reference frame. Instead, the first level is continents/parts of the world: North America, South America, Europe, Asia, Africa, Australia/Oceania. Then, within Europe, there are Scandinavia, Romance-speaking Europe, Central Europe, Balkans, Eastern Europe. (This is my best attempt to reflect on my own mental map of Europe, in geographical/visual ontological dimension. It's admittedly inconsistent because I also want to extract Iberia and the British Isles. Probably most of our mental models are slightly "fuzzy", inconsistent. In the aspects of thinking where the speed and quality of thought matter, we should probably work on making them more consistent!) Then, within each sub-region, there are specific countries.

In another visual/spatial reference frame, my room, there are areas "close to bed", "close to the table", "close to the window", "close to the wardrobe".

Perhaps, one of the reasons why the mind can think visually/spatially quicker than abstractly and remember more things is that the mind doesn't need to switch the type of relationship when it moves through the hierarchy: it remains spatially-part-of/located-within/located-near. Per Hawkins, this constitutes a single reference frame(?), and thinking is the very act of movement within this frame. On the other hand, abstract thinking seems to sometimes cross a boundary of two reference frames. For example: Anode overhang decreases the risk of Lithium plating: "Lithium plating" → (object-of-attention/alpha) "Risks of Lithium plating" → (reduced-by) "Anode overhang", two different reference frames are involved in this idea/thought.

Features in the reference frames have the same type of relationship with the concept

Another difference between the actual reference frames in my mind and evergreen notes that I create is that reference frames are approximately ontologically consistent: all features in a "star frame" are connected with the concept with approximately the same ontological relationship.

This seems to be another reason why evergreen notes don't reflect the actual structure of mental maps and don't seem to increase the speed of bare thinking. Most of my evergreen notes are either statements (what is) or imperative notes (what should be). Both these types of notes represent complete thoughts, or "walks"/"pathways" across some implicit reference frames. Often these notes are linked to each other with a relationship "related-to", which is a relationship between thoughts/ideas, not concepts. My mind doesn't seem to build a lot (if any) of reference frames in the related-to dimension. This is why, I think, I keep having a hard time recalling things that I wrote in my evergreen notes.

Explaining the power of systems thinking practices using reference frames

Anatoly Levenchuk's book Systems Thinking (available as a course in English here, as a book in Russian here) has many powerful ideas. Some of them, as I now think, can be explained nicely with the speculative insights about reference frames in people’s minds (which I covered above).

Extracting system levels is a mind-gardening practice

A systems thinker should carefully "extract"/separate system levels among different dimensions: functional, constructive, spatial, economic, and more.

A nice property of the resulting system breakdowns is that they rarely have more than seven components/sub-systems. For example, here's a possible functional breakdown of a car:

The functional sub-systems of "Seating and climate control" system could be "windows", "roof/doors", "seats", "climate control".

In just about any system and any kind of system breakdown, it should be a rare situation when there are more than seven functional/constructive/spatial/cost/etc. components in a system and no intermediate/hierarchical system levels could be plausibly extracted.

The punch line is, of course, that these breakdowns with seven or fewer features can be directly represented in the minds of the people who work with the system as a single reference frame. This makes thinking about the system more economical and predictable: people would be less likely to forget about components even if they don't constantly refer to diagrams and text.

Communication should become more reliable with shared reference frames

Levenchuk stresses in the book that system breakdowns should be shared/agreed upon/collaboratively created by all people who work on the system. And while it's a truism that alignment reduces the need for micro-coordination in projects, the additional benefit of aligning on system breakdowns is that if team members and stakeholders all internalise them as singular reference frames in their minds, communication becomes even more efficient and losses decrease. Communication without alignment on system breakdowns looks like this:

Vague/fuzzy/idiosyncratic topology of reference frames in the mind of person A → Language (as produced by person A: phrases, speech, text) → (losses in speaking/writing/reading/hearing) → Language (as perceived by person B) → Vague/fuzzy/idiosyncratic topology of reference frames in the mind of person B.

The problem here is that since the topology (and the set) of reference frames in the mind of person B is different from those in the mind of person A, any losses and mistakes are exacerbated. Also, in long chains of communication, person A → person B → person C, the message gets transformed because there is no shared internal "base" in the minds of these people. This is the Chinese whispers effect.

When, on the contrary, people have similar reference frames of system breakdowns in their minds, mistakes during communication are likely contained much better: the receiver needs to "pigeonhole" the message into the same topology of reference frames from which it originated. Mistakes in the message (remember: a message is a pathway in or across the reference frames) should often be effectively "error-corrected" to the original message. Also, multi-stage communication when all people in the chain share a map of reference frames (system breakdowns) should be much more reliable: errors should not amplify as quickly as in the case when these people don't share reference frames.

The reference frames of project alphas

In systems thinking terminology, alpha is "an important object of attention" (whose progress should be tracked to track the overall progress of the project or assess the health of the project). Alpha-of and sub-alpha-of are the corresponding types of ontological relationships.

Seven main alphas can be extracted in almost any project (not necessarily software development project, albeit the framework of alphas (OMG Essence) was originally developed by Ivar Jacobson for software projects):

Seven alphas nicely fit in a single mental reference frame (which, again, makes thinking quicker, more economical, and more reliable):

Every project alpha has its own sub-alphas, those sub-alphas have their own sub-alphas, and so on. All these breakdowns can be mental reference frames, too.

By the way, I don't feel that there are "horizontal", mesh-like connections between features in these reference frames of abstract concepts, as depicted in the picture above. At least, not in my mind. At best, there are many proto-frames with just one feature, but all these frames are weakly connected to each other and therefore don't aid thinking (again, this is according to my reflective feeling only). It might be that I didn't acquire the necessary mental tools/habits to track such "horizontal" relationships in the reference frames. Alternatively, I may belong to a part of the population that lacks such an ability. Finally, this might be universal for all human minds.

Strengthen the intelligence by growing the repertoire of reference frame dimensions

Although it seems to me that the number of features on a single reference frame attached to a concept is limited to seven or so, the overall density of the concept graph, and thus the speed and the quality of thinking can be improved by increasing the number of reference frames attached to every concept. As Hawkins wrote, the brain can handle this easily: a single cortical column can learn and store hundreds of reference frames. (This doesn't imply that cortical columns responsible for abstract thinking are dedicated to a single concept each: I think Hawkins didn't assert this in the book.)

In Systems Thinking, Levenchuk introduces several types of relationships that people usually don't think about: functional-part-of, constructive-part-of, spatial-part-of, cost-part-of, sub-alpha-of. Use them in system breakdowns to increase the density of the concept graph in your mind.

Systems thinkers should also deliberately seek new types of relationships relevant to a particular project (system, area of research), document them, and build system breakdowns (i. e., reference frames) using these new dimensions.

The idea for a new tool for thought: "mind gardener", AI assistant that helps to build a topology of reference frames in the mind of a learner

The first users would be individuals who learn some complex domain (e. g. a professional practice, or a field of science) and want to make their thinking in this domain quicker, more reliable, and more economical energetically. In other words, these are the same people who Andy Matuschak targets with mnemonic medium: the mnemonic medium should be developed in a context where people really need fluency.

The tool helps learners to deliberately build and garden their mental maps (reference frames) in a certain domain, heavily leveraging natural language models and applying natural language inference to overcome the weaknesses of the earlier attempts at building such tools.

In terms of the interface, I think the tool should be like a visual graph modeller and explorer, similar to TheBrain tool, rather than a text editor. However, I think that the tool should impose two important limitations:

Different reference frames (based on different types of relationships between the concept and features, i. e. different ontologies) should be clearly separated and, perhaps, even not appear on the same screen. TheBrain tool doesn't separate ontologies, all connections live in the same visual space (just as the links between my evergreen notes are all similar). I think this confuses the reference frames in the learner's mind.

Don't allow more than seven features in a single reference frame. A deliberate limitation, à la Twitter's original 140-character limit, should ensure that the tool actually reflects the reference frames in the learner's mind, rather than drifting towards an elaborate, explorative web of knowledge detached from the learner's mind.
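The two limitations above could be enforced directly in the tool's data model. This is a minimal sketch, not a design for the actual tool: the names `ReferenceFrame`, `frame_for`, and `MAX_FEATURES` are hypothetical, and the example features are the "Seating and climate control" sub-systems mentioned earlier in the post:

```python
MAX_FEATURES = 7  # the deliberate limit proposed above

class ReferenceFrame:
    """A concept plus up to seven features, all linked by one relation type."""

    def __init__(self, concept, relation):
        self.concept = concept
        self.relation = relation
        self.features = []

    def add_feature(self, feature):
        if feature in self.features:
            return  # already present, nothing to do
        if len(self.features) >= MAX_FEATURES:
            raise ValueError(
                f"'{self.concept}' already has {MAX_FEATURES} features; "
                "extract an intermediate concept instead"
            )
        self.features.append(feature)

# Frames are keyed by (concept, relation), so different ontologies never mix.
frames = {}

def frame_for(concept, relation):
    """Return the frame for this concept and relation, creating it if needed."""
    key = (concept, relation)
    if key not in frames:
        frames[key] = ReferenceFrame(concept, relation)
    return frames[key]

seating = frame_for("Seating and climate control", "functional-part-of")
for part in ["windows", "roof/doors", "seats", "climate control"]:
    seating.add_feature(part)
print(seating.relation, seating.features)
```

Raising an error on the eighth feature is only one possible design; a real tool might instead prompt the learner to extract an intermediate level in the hierarchy.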

The tool should be probabilistic and leverage natural language models heavily

I think a big drawback of existing ontology editors is that they are too formal and not probabilistic and imprecise enough. The concepts in the proposed tool should be nebulous by default: a concept in a tool doesn't have a single fixed title (unlike evergreen notes, whose titles are like APIs). When a learner adds a new concept using a particular word or phrase, the tool automatically expands it into a cloud of words and terms (using a language model), and coalesces (or probabilistically attaches) the concept with already existing concepts: e. g., if the learner added "public transport" and "bus" in different reference frames, they should automatically attach to each other with some probability.
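The "expand and attach" step could be sketched roughly as follows. This is a toy illustration under strong assumptions: the hand-written `TERM_CLOUDS` table stands in for the language model, and Jaccard overlap between term clouds stands in for the attachment probability; neither is a claim about how a real tool would work:

```python
# Stand-in for a language model: a hand-written table of related terms.
# A real tool would generate these clouds; this table is purely illustrative.
TERM_CLOUDS = {
    "public transport": {"public transport", "bus", "tram", "metro", "transit"},
    "bus": {"bus", "coach", "public transport", "transit"},
    "anode": {"anode", "electrode", "graphite"},
}

def expand(phrase):
    """Return the cloud of terms for a phrase (just the phrase if unknown)."""
    return TERM_CLOUDS.get(phrase, {phrase})

def attachment_score(a, b):
    """Jaccard overlap of two term clouds: a rough score between 0 and 1."""
    cloud_a, cloud_b = expand(a), expand(b)
    return len(cloud_a & cloud_b) / len(cloud_a | cloud_b)

print(attachment_score("public transport", "bus"))
print(attachment_score("public transport", "anode"))
```

With these toy clouds, "public transport" and "bus" share half of their combined terms, while "public transport" and "anode" share none, so only the first pair would be a candidate for attachment.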

The tool should find a very fine balance between the relative formalism/strictness of ontologies (every reference frame should be ontologically consistent) and the fuzziness of associations, nebulosity of concepts. (Here, I adapt Levenchuk's idea with regard to why formal architecture modelling tools lose to more informal tools such as coda.io.)

The tool could also use the language model to automatically choose the phrase or the word which is most appropriate to denote the concept in a particular reference frame view.

The tool could also leverage the language model (primed with the books and other written materials on the domain being studied, e. g., a web of evergreen notes) to suggest to the learner new nodes in reference frames:

When the type of relationship is not explicitly specified by the learner (for example, on the reference frame above, it is not explicitly said that the relationship between "Li-ion cell" concept and the features is functional-part-of) the tool may derive it automatically using its language model and annotate the relationships in the navigation interface.

The tool may also suggest to the learner to add new reference frames to existing concepts: "You have added a functional breakdown of a Li-ion cell, would you like to add constructive breakdown now?"

It's perhaps not within the reach of the state-of-the-art natural language models existing today to suggest to the learner how to optimise the concept structure, extract new concepts (and perhaps even suggest new original words for these concepts, using some morphological models!), or coalesce concepts, but five years from now, AI should definitely help human learners to improve the structure of their mental reference frames.

At present, the tool should at least try to make reorganising the topology of concepts as easy as possible, automate it: I think that "structure ossification" is a big issue with most existing knowledge management, spaced repetition, and note-taking systems. Perhaps, the probabilistic nature of the tool could be helpful here.

Authored sets of reference frames (mental maps) in a mnemonic medium

Many types of prompts which Andy Matuschak describes in "How to write good prompts: using spaced repetition to create understanding" elucidate different parts of conceptual reference frames: many "simple fact", conceptual prompts, and all list prompts invite the learner to recall features of some reference frame (and procedural prompts train sequence memory, which the proposed new tool could support, too, but discussing it is out of scope of this post).

Therefore, it seems that a mnemonic medium with a good set of prompts presupposes a relatively well-defined set of reference frames (in the mind of the author of the mnemonic medium), but stops short of trying to impart these reference frames to the learners more explicitly than "by example", i. e. via prompts and spaced repetition of these prompts.

I don't see a particular value in stripping learners of the ability to explore the reference frames more directly, once they finished writing a mnemonic medium or a course. So, the proposed tool may be integrated with mnemonic mediums: it could be loaded with a set of reference frames which the learner should "fill in"/"unlock", like in a computer game.

Spaced repetition

Of course, the tool should include spaced repetition features to help learners to drive the reference frames into their minds, and retain these reference frames over time.

The tool could automatically generate conceptual, list, simple fact, and procedural prompts from the structure of reference frames and mapped concepts.
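Because a reference frame is just a concept, a relation type, and a short list of features, prompt generation can be largely mechanical. This is a minimal sketch for list and simple fact prompts only; the function names and the question wording are assumptions, and a real tool would presumably use a language model to phrase the questions more naturally:

```python
def list_prompt(concept, relation, features):
    """Build a (question, answer) pair asking for all features of a frame."""
    question = f"List the {len(features)} '{relation}' features of '{concept}'."
    answer = ", ".join(features)
    return question, answer

def simple_fact_prompts(concept, relation, features):
    """Build one (question, answer) pair per feature of a frame."""
    return [
        (f"Which concept has '{feature}' as a '{relation}' feature?", concept)
        for feature in features
    ]

parts = ["windows", "roof/doors", "seats", "climate control"]
question, answer = list_prompt("Seating and climate control", "functional-part-of", parts)
print(question)
print(answer)
for q, a in simple_fact_prompts("Seating and climate control", "functional-part-of", parts):
    print(q, "->", a)
```

Since frames are capped at seven features, every generated list prompt stays within what a learner can plausibly recall in one go.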

The tool may also have another interesting type of "memory drill" which most spaced repetition systems currently lack: find one or several "pathways" through reference frames between two concepts, e. g. "Cell degradation" and "Anode overhang":

Cell degradation → (mechanism) Lithium plating → (alpha) Risk of Lithium plating → (decreased-by) Anode overhang;

Cell degradation → (process-in) Li-ion cell → (functional-part) Anode → (spatial-part) Anode overhang.

This is similar to the six degrees of Wikipedia game.

The tool may analyse the pathways that the learner suggests to determine which dimensions (ontologies) the learner doesn't use and has likely forgotten, and use this to inform future spaced repetition prompts.
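Both the pathway drill and the analysis of unused dimensions reduce to simple operations on a graph with typed edges. This is a minimal sketch, assuming the edges are directed and stored as (source, relation, target) triples; the edge list is taken from the two example pathways above, and the function names are illustrative:

```python
# Typed, directed edges taken from the two example pathways above.
EDGES = [
    ("Cell degradation", "mechanism", "Lithium plating"),
    ("Lithium plating", "alpha", "Risk of Lithium plating"),
    ("Risk of Lithium plating", "decreased-by", "Anode overhang"),
    ("Cell degradation", "process-in", "Li-ion cell"),
    ("Li-ion cell", "functional-part", "Anode"),
    ("Anode", "spatial-part", "Anode overhang"),
]

def pathways(start, goal, edges, visited=frozenset()):
    """Yield every cycle-free pathway from start to goal as a list of edges."""
    if start == goal:
        yield []
        return
    visited = visited | {start}
    for source, relation, target in edges:
        if source == start and target not in visited:
            for rest in pathways(target, goal, edges, visited):
                yield [(source, relation, target)] + rest

def unused_relations(learner_paths, edges):
    """Relation types present in the graph but absent from the learner's pathways."""
    known = {relation for _, relation, _ in edges}
    used = {relation for path in learner_paths for _, relation, _ in path}
    return known - used

all_paths = list(pathways("Cell degradation", "Anode overhang", EDGES))
for path in all_paths:
    print("Cell degradation", *[f"-> ({rel}) {tgt}" for _, rel, tgt in path])

# Suppose the learner recalled only the first pathway.
print(sorted(unused_relations(all_paths[:1], EDGES)))
```

The relation types returned by `unused_relations` are the dimensions the learner did not traverse, which makes them natural candidates for the next round of prompts.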

Conclusions

Personal webs of “evergreen” notes and Zettelkasten notes are very useful for exploring new ideas and doing “frontier thinking”, but are not optimal to increase the fluency and reliability of thinking in a known domain, such as an area of research or a professional practice. This is because the structure of notes doesn’t reflect well how the knowledge is stored in the human mind.

I speculate that conceptual knowledge is stored in “reference frames” that look like a concept in the middle connected to at most seven features. The type of relationship between the concept and the features (features are other concepts) is more or less consistent within a reference frame.

Therefore, I suggest that overly connected “notes” or concepts in a personal ontology editor are not as “good” in speeding up one’s thinking, as, for example, overly connected “hub” airports in speeding up air travel. Reference frames with more than seven features probably don’t exist or work poorly.

In systems thinking (creating system breakdowns) and designing one’s own reference frames for thinking in a certain domain, strive to extract concepts and breakdowns so that the “fanout order” in the topology never exceeds seven. Increase the overall connectivity of your “mental map of concepts” by adding new reference frames (mapping out new ontological relationships between concepts), not by adding more features to existing reference frames.

Finally, I propose an idea for a new tool for thought, building upon the features of ontology editors and spaced repetition systems, that leverages state-of-the-art natural language models to help learners to build and “garden” the reference frame maps in their minds.

It looks like you had an idea that Evergreen notes or Zettelkasten will improve your “bare” thinking. I have a different impression. Each of us has our own limitations on how many objects we can keep in working memory, how well we can operate with them, how fast we can think, and so on. Those abilities fluctuate. If I’m exhausted or ill, I see significant limitations on my ability to think. The same limitation applies if I injure my ankle, which limits my ability to run and jump.

We have tasks that are too complex for our “bare” thinking even under ideal conditions. So, we use “tools” like thinking on paper, using frameworks, and so on. One part of Zettelkasten is collecting data and building relationships between it (and most articles are dedicated to that part). The other part is deliberate practice: working through this data and these relationships, and trying to develop new ideas (this part is often omitted or expected to happen accidentally without applying effort). So, for me, Evergreen notes or Zettelkasten won't help you to improve "bare" thinking, but rather to overcome some of its limitations.

> I don't feel that there are "horizontal," mesh-like connections between features in these reference frames of abstract concepts, as depicted in the picture above. At least, not in my mind.

Yes, it’s difficult to analyze such relationships in one’s mind, but it’s much easier when you think on paper. You can “isolate” some parts and think through them, not worrying about forgetting or losing some other good ideas, and also being able to quickly change context in your mind.

In relation to the “strictness of ontologies,” I have two ideas. First of all, those connections are characterized by the person who creates such relationships, not by the objects or the tools used. You and I can find different relationships in the same data, and that reflects different life experiences and thinking processes. The second idea is that unexpected connections from seemingly unrelated domains can help to look at a task at hand from a new point of view, and in this way advance in solving it. Associative thinking may be very helpful.

Has your perspective changed (and how) after three years from the moment when you wrote this article?

I'm a heavy Anki user and spaced repetition learning is extremely powerful but it also has some serious weaknesses. It is super easy to remember facts, but if you are not careful, you only know, but you don't understand anything. So it is easy to jam tons of tiny facts in your head without forming any connections between them.